Autofs Ubuntu


Note (2016-07-03): This article might still be interesting if you want to learn about autofs on Linux, but if you want to share files with a local VM I recommend reading this updated article instead.

There is a really cool feature on Unix systems called autofs. It is really crazy, really magical, and really convenient. In a previous life I had to maintain what is likely the world's most complicated hierarchical autofs setup (distributed across thousands of NetApp filers), so I learned a lot about autofs and how it works. In this post, though, I'm just going to explain what it is, why you'd want to use it, and how to set it up.

I'm explicitly going to explain the workflow for using autofs with a local VM, not how to use it in production.

Let's say you're in the habit of doing dev work on a VM. A lot of people do this because they are on OS X or Windows and need to do dev work on Linux. I do it because I'm on Fedora and need to do dev work on Debian, so I have a VM that runs Debian. You can set up autofs on OS X too. However, I don't use OS X, so I can't explain how to set it up. I believe it works exactly the same way, and I think it's even installed by default, so I think you can follow this guide on OS X and it might be even easier. But no guarantees.

Now, there are a lot of ways to set things up so that you can access files on your VM. A lot of people use sshfs because it's super easy to set up. There's nothing wrong per se with sshfs, but it works via FUSE, so every filesystem operation takes a detour through a userspace process, which makes it kind of weird and slow. The slowness is what really bothers me personally.

There's this amazing thing called NFS that has been available on Unix systems since the 1980s and is specifically designed to be an efficient POSIX network filesystem. It has a ton of features, you get lots of fine-grained control over your NFS mounts, and the Linux kernel has native support for acting as both an NFS client and an NFS server. That's right, there's an NFS server in the Linux kernel (there's also a userspace one). So if you're trying to access remote files on a Linux system for regular work, I highly recommend using NFS instead of sshfs. It has way higher performance and was designed from day one for Unix systems remotely accessing filesystems on other Unix systems.

Setting up the NFS Server on Debian

There's one package you need to install, nfs-kernel-server. To install it:
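On Debian this is a single apt-get call. The sketch assumes sudo is set up on the VM; run it as root otherwise.

```shell
# Install the in-kernel NFS server along with its userspace helpers
sudo apt-get install nfs-kernel-server
```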

Now you're going to want to set this up to actually run and be enabled by default. On a Debian Jessie installation you'll do:
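With systemd this takes two commands. As far as I know, Jessie's systemd (version 215) predates the combined `systemctl enable --now` form, so enabling and starting are kept separate here:

```shell
# Start the NFS server at every boot...
sudo systemctl enable nfs-kernel-server
# ...and also start it right now, without rebooting
sudo systemctl start nfs-kernel-server
```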

If you're using an older version of Debian (or Ubuntu), you'll have to futz with the various legacy systems for managing service startup, e.g. Upstart or update-rc.d.

Great, now you have an NFS server running. You need to set it up so that other people can actually access things using it. You do this by modifying the file /etc/exports. I have a single line in mine:
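Going by the description in the next paragraph (export /home/evan read/write to 192.168.124.0/24), an entry of roughly this shape does the job. The `sync` and `no_subtree_check` options are my assumption, not necessarily what the original line used, and you should substitute your own home directory and libvirt subnet:

```shell
# Export /home/evan read-write (rw) to every host on the VM subnet.
# 'sync' and 'no_subtree_check' are assumed, commonly used options.
echo '/home/evan 192.168.124.0/24(rw,sync,no_subtree_check)' | sudo tee -a /etc/exports
```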

This makes it possible for any remote system on the 192.168.124.0/24 subnet to mount /home/evan with read/write permissions on my server without any authentication. Normally this would be incredibly insecure, but by default libvirt puts local virtual machines on a private NAT subnet (192.168.124.0/24 on my machine), so I'm OK with it. In my case I know that only localhost can access this machine, so it's only insecure insofar as anyone I lend my laptop to can access my VM.
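If the /24 notation is unfamiliar: a client matches the export when the first 24 bits of its IPv4 address equal those of 192.168.124.0. The helpers below (`ip_to_int` and `in_subnet` are hypothetical names, not part of any NFS tool) spell out that prefix comparison in plain POSIX shell arithmetic. It's a sketch of the idea, not the server's actual matching code:

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split "a.b.c.d" into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if address $1 lies inside CIDR block $2.
in_subnet() {
  net=${2%/*}          # e.g. "192.168.124.0"
  bits=${2#*/}         # e.g. "24"
  # Keep the top $bits bits; shell arithmetic is typically 64-bit,
  # so mask the shifted value back down to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

in_subnet 192.168.124.252 192.168.124.0/24 && echo "the VM subnet host may mount /home/evan"
in_subnet 192.168.1.10 192.168.124.0/24 || echo "a host on another subnet is refused"
```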

Please check your network settings to verify that remote hosts on your network can't mount your NFS export, since if they can, you've exposed it to your local network. Your firewall may already block NFS traffic by default; if not, just narrow the host mask. There are also ways to set up NFS authentication, but you shouldn't need them just to use a VM, and that topic is outside the scope of this blog post.

Now reload nfs-kernel-server so it knows about the new export:
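Reloading the service boils down, as far as I know, to re-reading /etc/exports. Doing that directly with `exportfs` is an equivalent alternative, and it also lets you inspect the result:

```shell
# Re-read /etc/exports and re-export every entry
sudo exportfs -ra
# List the active exports with their options to confirm the new one is live
sudo exportfs -v
```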

Update: It was pointed out to me that if you're accessing the VM from OS X, the automounted NFS client connects from high (unprivileged, above 1024) source ports, so in the /etc/exports file on the guest VM you'll need to add 'insecure' as an export option.
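In practice that means appending `insecure` to the option list of the existing line rather than adding a second entry for the same path. The example line in the comment reuses assumed options (`sync`, `no_subtree_check`) and is illustrative only:

```shell
# Edit /etc/exports on the guest and add 'insecure' to the options, e.g.
#   /home/evan 192.168.124.0/24(rw,sync,no_subtree_check,insecure)
sudoedit /etc/exports
# Re-export so the new option takes effect
sudo exportfs -ra
```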

Setting up the NFS Client on Debian/Fedora

On the client you'll need the autofs package. On Fedora that will be:
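On Fedora 22 and later, dnf replaces yum as the package manager; on older releases, substitute `yum`:

```shell
# Install the automounter (kernel support plus the automount daemon)
sudo dnf install autofs
```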

and on Debian/Ubuntu it will be:
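The Debian and Ubuntu package uses the same name:

```shell
# Same package name as on Fedora
sudo apt-get install autofs
```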

I think it's auto-installed on OS X?

Again, I'm going to assume you're on a recent Linux distro (Fedora, or a modern Debian/Ubuntu) that has systemd, and then you'll do:
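These are the same two systemctl steps used on the server, this time for the autofs unit. The unit name `autofs` matches the Fedora and Debian packages, as far as I know:

```shell
# Start the automount daemon at boot and right away
sudo systemctl enable autofs
sudo systemctl start autofs
```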

Before we proceed, I recommend that you record the IP of your VM and put it in /etc/hosts. There's some way to set up static networking with libvirt hosts, but I haven't bothered to figure it out yet, so I just logged into the machine and recorded its IP (which is the same every time it boots). On my system, in my /etc/hosts file I added a line like:
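The entry is just the VM's IP followed by the alias. This sketch uses the address and the `d8` name from the next paragraph; substitute your own:

```shell
# Map the hostname d8 to the VM's IP address
echo '192.168.124.252 d8' | sudo tee -a /etc/hosts
```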

So now I can use the hostname d8 as an alias for 192.168.124.252. You can verify this using ssh or ping.
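Any of these checks works. `getent hosts` is an extra option I'm adding: it shows what the name resolves to through the normal lookup path, including /etc/hosts, without sending any traffic.

```shell
# Show what d8 resolves to
getent hosts d8
# Check that the VM answers on the network
ping -c 3 d8
# Or just log in
ssh d8
```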

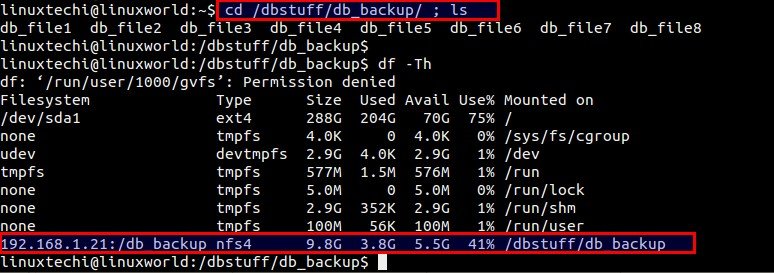

Due to some magic that I don't understand, on modern NFS systems there's a way to query a remote server and ask what NFS exports it knows about. You can query these servers using the showmount command, which in my case lists the /home/evan export I set up above.
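The query itself, assuming the `d8` alias from /etc/hosts. The `-e` flag asks the server for its export list, and showmount prints each exported path next to the hosts allowed to mount it:

```shell
# Ask the NFS server on d8 which directories it exports, and to whom
showmount -e d8
```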

This confirms that the host behind the d8 alias I added is actually exporting NFS mounts the way I expect. If you don't have a showmount command, you may need to install the package that provides it: nfs-utils on Fedora, or nfs-common on Debian/Ubuntu.

Actually Using Autofs

Now here's where it gets magical.

When you do any filesystem operation (e.g. change directory, open a file, list a directory, etc.) on a path under an autofs-managed directory that would normally fail with ENOENT, the kernel checks whether that path should have been available via autofs. If it should, the autofs daemon mounts the filesystem and the kernel then proceeds with the operation as usual.

This is really crazy if you think about it. Literally every filesystem-related system call goes through path lookup logic that understands this autofs thing and can transparently mount remote media (which doesn't have to be NFS; this used to be how Linux distros auto-mounted CD-ROMs) before proceeding with the operation. And this is all invisible to the user.

There are a ton of ways to configure autofs, but here's the easiest way. Your /etc/auto.master file will likely contain a line like this already (if not, add it):
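The line in question is `/net -hosts`. `-hosts` is autofs's built-in map that exposes each host's NFS exports under the mount point. The sketch below appends the line only if no uncommented /net entry exists yet, then restarts the daemon so it re-reads the master map:

```shell
# Add the /net entry unless auto.master already has an active one
grep -q '^/net' /etc/auto.master || echo '/net -hosts' | sudo tee -a /etc/auto.master
# Make autofs pick up the change
sudo systemctl restart autofs
```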

This means that there is a magical autofs directory called /net, which isn't a real directory. If you go to /net/<host-or-ip>/mnt/point, it will automatically NFS mount /mnt/point from <host-or-ip> on your behalf.
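To watch it happen, touch a path under /net, then look for the NFS mount that autofs created. Here `d8` and /home/evan are my alias and export; `findmnt` comes from util-linux:

```shell
# Listing the directory is enough to trigger the automount
ls /net/d8/home/evan
# The NFS mount now shows up in the mount table
findmnt -t nfs,nfs4
```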

Autofs Mount Options

If you want to be really fancy, you can set /etc/auto.master to use a timeout (how long an idle mount stays mounted before autofs unmounts it), e.g.:
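The timeout is given in seconds on the map's line in the master map. The sketch below rewrites the /net entry with an arbitrary 60-second value (pick your own) and restarts autofs so the change applies:

```shell
# Rewrite the /net entry to include a 60-second idle timeout
sudo sed -i 's|^/net[[:space:]].*|/net -hosts --timeout=60|' /etc/auto.master
sudo systemctl restart autofs
```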

So here's what I do. I added a symlink like this:
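Assuming the `d8` alias and the /home/evan export from above, the link points from my home directory into the /net tree:

```shell
# ~/d8 -> the automounted export; nothing is mounted until it's used
ln -s /net/d8/home/evan ~/d8
```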

So now I have an 'empty' directory called ~/d8. When I start up my computer, I also start up my local VM. Once the VM boots up, if I enter the ~/d8 directory or access data in it, it's automatically mounted! And if I don't use the directory, or I'm not running the VM, then it's just a broken symlink.
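If you script against the VM, you can tell those two states apart without ever calling mount. Below is a minimal sketch; the `link_status` name is hypothetical. Testing `[ -d "$1/" ]` follows the link, which is exactly the kind of access that makes autofs try the mount, so the result is "reachable" when the VM is up and "dangling" when it isn't:

```shell
#!/bin/sh
# Hypothetical helper: classify a symlink such as ~/d8.
# Following the link (the -d test on "$1/") is what triggers autofs.
link_status() {
  if [ -L "$1" ] && [ -d "$1/" ]; then
    echo reachable      # target resolves; under autofs the export is now mounted
  elif [ -L "$1" ]; then
    echo dangling       # link exists but its target doesn't resolve (e.g. VM down)
  else
    echo missing        # no symlink at this path at all
  fi
}

link_status "$HOME/d8"
```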

This solves a major problem with the conventional way you would use NFS with a VM. Normally you would have a line in /etc/fstab with the details of the NFS mount. However, if you set it up to be mounted automatically, your machine will try to mount the VM before the VM has finished booting. You can add the user option (together with noauto, so nothing is attempted at boot) to your /etc/fstab options, which lets you mount the VM's NFS export later without being root, but then you have to manually invoke the mount command once you know the VM has started.
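For comparison only (you don't need any of this with autofs), the fstab approach looks roughly like the sketch below. The /mnt/d8 mount point is a made-up example. `noauto` keeps boot from trying to mount the VM early, and `user` is what allows the later unprivileged mount:

```shell
# One-time setup: a mount point plus an fstab entry for the VM's export
sudo mkdir -p /mnt/d8
echo 'd8:/home/evan /mnt/d8 nfs noauto,user 0 0' | sudo tee -a /etc/fstab
# Later, once the VM is up, mount it by hand (no root needed thanks to 'user')
mount /mnt/d8
```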


By using autofs, you don't ever need to type the mount command, and you don't even need to change /etc/fstab. I recommend editing /etc/hosts because it's convenient, but you don't need to do that either. I could have just as easily used /net/192.168.124.252/home/evan and not created a hosts entry.